Golden ratio discovered in quantum world: Hidden symmetry observed for the first time in solid state matter

Quantum Gravity Research ?

Hi Don and all,

[Sorry, I was unable to join the meetup yesterday, and I will not be able to join today.]

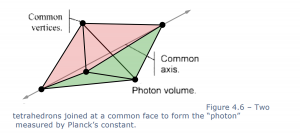

Indeed, Planck’s brilliant 1911 explanation of his interpolation equation is crucial to resolving the conceptual confusion that leads to the paradox of the “photon” as a particle. The paradox is a direct function of the unexamined indeterministic philosophical assumptions underlying the confused interpretations.

When all the varied indeterministic worldviews are switched for a strictly Neo-Deterministic one, these evidence-based “conceptual goggles” allow us to see that we had been confusing an event with an object: we register an all-or-nothing detection event simply because the atomic electronic vibrating shells are themselves harmonically quantized, and because the dynamics involved in a detection event cannot occur part-way between harmonics. Standing-wave structures are inherently quantized.

If enough rightly patterned incoming aetheric waves arrive to cause a resonant response in the detecting unit, the incoming motion will become harmonically stabilized in the detecting unit and we will register an all-or-nothing threshold detection event, which unfortunately physicists interpret as a “particle”, with catastrophic conceptual consequences.

The whole of the conventional QM—Quantum Misinterpretations—is based on this truly-pervasive “particle-bias”, and the paradoxes disappear when you don’t look for particles that don’t exist. Waves are actually spread out and their “particle-like” threshold behaviour is a function of the harmonic resonant quantization of energy.

Don, you mention Eric Reiter. I have been in contact with Eric for many years now. I think that his research has the potential to really change things. Let me explain.

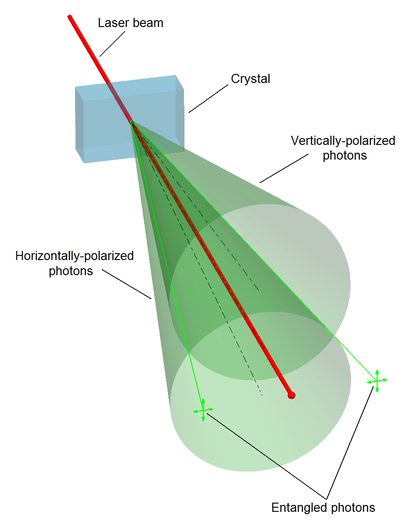

Eric performs the classic beam-split coincidence experiment, but with an overlooked and thus never-used-before source: gamma rays. He demonstrates empirically that, while light is indeed emitted in a quantized [aetheric] pulse, it does not stay quantized and is actually absorbed continuously. This result is consistent with Planck’s long-forgotten Threshold Hypothesis (1911), and falsifies the notion of a “photon” as a discrete entity that travels through space. By the way, the totality of quantum effects is consistent with Planck’s Threshold Hypothesis; it is just this experiment using gamma rays that, while still consistent with Planck, is directly inconsistent with the photon.

Although his results ultimately do require independent verification (as every ground-breaking experiment does), I think he may very well be onto something crucially important and truly paradigm-shattering. Such an independent experimental falsification of the “photon” as a particle should change everything, at least in an ideal world where academic science still follows the self-correcting methodology of the scientific narrative. Of course, before bringing any sort of objection to his claims, it is important to go through all his material, as he painstakingly reviews all the ways in which he has been accused of being mistaken (whether on experimental or theoretical grounds).

The beam-split coincidence test for light closely resembles a simplified definition of the photon, as described by Einstein. The definition states that a singly-emitted photon’s worth of energy, an hf, must all go one way or another at a beam splitter (h = Planck’s constant, f = frequency). Amazingly, we are first to perform this fundamental test with gamma-rays. These tests with gamma-rays show that a singly emitted “light quanta” can cause coincident detection events beyond a beam-splitter, at rates that far exceed the accidental chance rate predicted by QM. Here we are saying light is emitted in a quantized pulse, does not stay quantized, and is absorbed continuously. You will see how our tests that defy QM imply that similar tests of past that were performed with visible light were not able to see through the photon illusion, and were just measuring noise. Our test does not split a gamma “photon” into two half-size detection pulses; it detects two full-size pulses in coincidence. It is two-for-one! This does not violate energy conservation; it violates the principle of the photon. The obvious explanation is the long abandoned accumulation hypothesis… a Threshold Model. Our work also explains why the accumulation hypothesis was abandoned. Light is emitted in a photon’s worth of energy hf, but thereafter the cone of light spreads classically. There are no photons. Light is classical. We explain our particle-like effects, such as the photoelectric and Compton effects, with newly understood properties of the charge-matter-wave in a Threshold Model.
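To make the phrase "accidental chance rate" concrete, here is a minimal sketch of how such a rate is conventionally estimated for two uncorrelated detectors: the product of the two singles rates and the coincidence window. The function names and every number below are hypothetical placeholders, not values from Eric's experiments, and some conventions use twice the window width instead.

```python
def chance_coincidence_rate(rate_a_hz, rate_b_hz, window_s):
    """Expected accidental coincidences per second for two uncorrelated detectors.

    Assumes the simple R_a * R_b * tau estimate; some setups use 2 * tau.
    """
    return rate_a_hz * rate_b_hz * window_s


def excess_over_chance(measured_rate_hz, rate_a_hz, rate_b_hz, window_s):
    """Ratio of the measured coincidence rate to the accidental estimate."""
    return measured_rate_hz / chance_coincidence_rate(rate_a_hz, rate_b_hz, window_s)


# Hypothetical illustration values only (not experimental data).
singles_a = 100.0   # counts per second in detector A
singles_b = 200.0   # counts per second in detector B
window = 1e-7       # coincidence window in seconds
measured = 0.02     # measured coincidences per second

chance = chance_coincidence_rate(singles_a, singles_b, window)
ratio = excess_over_chance(measured, singles_a, singles_b, window)
print(f"chance rate: {chance:.4g} per second, measured/chance: {ratio:.3g}")
```

A ratio near 1 would mean the coincidences are compatible with pure chance; the claim quoted above is that the measured ratio is far larger than 1.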

I will cover all of this in my book.

Cheers!

On 22 January 2018 at 16:33, don mitchell <don86326@gmail.com> wrote:

Hi theVU/EU groupies,

While running links on Planck’s constant, I discovered Planck released a second theory in 1911 (The Loading Theory) that attaches Planck’s fine-grain (of the universe) constant to qualities of the atom (matter) rather than light.

The Second Quantum Theory is the name of a chapter in the book The Weight of the Vacuum by

“The loading theory takes h [Planck’s constant] as a maximum of action. This idea of action allowed below h is algebraically equivalent to “Planck’s second theory” of 1911 (8, 9, 13, 14). There, and in Planck’s subsequent works, Planck took action as a property of matter, not light (9). The unquantum effect implies that it was a false assumption to think h is due to a property of light.”

[8] Planck M., “Eine neue Strahlungshypothese. 1911,” [Physikalische Abhandlungen und Vorträge], Carl Schütte & Co, Berlin, 2, 249-259 (1958) (see eq. 14).

[9] Planck M., [The Theory of Heat Radiation], Dover, 153 (1913).

[13] Kuhn T. S., [Black-Body Theory and the Quantum Discontinuity 1894–1912], Oxford University Press, 235-264 (1978).

[14] Whittaker E., [History of Theories of Aether and Electricity 1900-1926], 103 (1953).

What stands out for me (Don) is my intuitively biased understanding that the atom’s structure, mass, and charge separations are responsible for the fine-grain qualities of the universe, versus something yet contended as a mystery in the mainstream notions of reality.

Pondering my belly button out loud,

-don

p.s.

Answer: Rules:

1) In turn, each will co-inform, by the understanding of each, on a selected group-topic to prime the group-mind, as each member’s responsibility to the group focus-effort.
2) Verbally reflect in turn on connecting thoughts of each about the notions of others.
3) Succinctly report in turn ensuing ‘Ah ha!’ moments concerning the group topic to the members.
4) Drink about it (celebrate the natural high of group cohesion).
5) Document (volunteers’ efforts) with a similar working social dynamic as 1), 2), and 3).
6) Repeat on evolving topics resulting from such group trust, inclusion, emerging enthusiasms.

Question: What is the way to multiply group IQ by group-harmonic thoughts devoid of typical memberwise competitiveness?

This answer above is informed by familiarity and practice with William Smith, Ph.D’s Appreciation, Influence, and Control Paradigm** (AIC), and prior participation in a similar facilitated discussion (which was magic).

** See: http://odii.com/index.php as the AIC paradigm’s home site.

Excerpt:

“What does it take for each of us to conduct our life and work so that by doing what we do and being who we are we automatically contribute to the common good?

William E. Smith, Ph.D”


When watching the first couple of videos (for example), consider that those foundational Non-Newtonian concepts of complexity science—concepts like interconnectedness, interdependency, emergence over scale, self-organization over time, pattern formation, non-linear dynamics, feedback, chaos, collective behaviours, phase-transitions, networks, adaptation—may be just referring to deeper matter. Electricity in space also seems to be characterized by these concepts, and this is one of the crucial missing pieces in the EU paradigm. This indeed seems to be a case of “As Above, So Below”.

All this is exactly what the Neo-Deterministic Worldview expects. I will write about this in my book.

By the way, Bill Mullen was the only “EU guy” who felt strongly about the importance of the link between complexity science and electricity in space. See:

Oops! I forgot the link to the Complexity Theory Course, here it is:

On 20 January 2018 at 12:23, Juan Calsiano <juancalsiano@gmail.com> wrote:

Jim,

Let me provide a few comments:

1) The concept of “quantum entanglement” is a strict function of unexamined indeterministic assumptions proper to the consensual physical philosophy and its resulting particle-bias. The standard assumptions result in an incoherent conceptualization of the world. The Neo-Deterministic worldview precludes such nonsense.

2) Complexity Science has been called “the science of the sciences” and the most important scientific development since modern physics. Complexity is based on strict determinism, and both emergence and chaos are deterministic (it’s a very common mistake to confuse chaos with non-causality). The randomness is only a function of subjective observer knowledge. To equate randomness with objective underlying physical causality is basically to assume non-causality, the core of the nonsensical Indeterministic Worldview. The Neo-Deterministic Worldview assumes a strictly causal boundless universe. In an unlimited universe, the totality must be ultimately unknowable.

3) You know that I know that Newton was more than the usual exoteric representation of him. When I say “Newtonian” and “Non-Newtonian”, I am referring to the consensual agreement that most people accept about those words. History clearly shows that Newton’s followers were much more “Newtonian” than Newton himself. The same can be said about Einstein.

4) The idea of self-similarity is extremely important, of course. That doesn’t mean that chaos (which has a strict definition and is purely deterministic) does not exist; of course it does (if unconvinced, just talk with any capable meteorologist). Anyway, in terms of the Neo-Deterministic worldview, all observed chaos is limited to a given portion of matter and to a given bounded range of scales and conditions. One only needs to scale down enough in such chaos, and order inevitably will emerge again. Such orderly “cycling” deeper portions of matter are of course not the limit of the scaling either. In a Boundless universe, there is no ultimate level / scale, no ultimate portion of matter.

5) You said: “So we end up with a world where every pattern is from random and chaotic motion at the bottom.” Again, the idea of chance or randomness as the ultimate cause of the world is fully-fledged Indeterminism. Evidence and coherence allow for the complete opposite worldview of Neo-Determinism, which assumes that the external world exists independently of the perceiving mind; that such material substance is endlessly divided and endlessly integrated, and that such inexhaustibly complex material substance moves at all the numberless scales of size, strictly causing all physical effects. In some specific situations and within a certain range of conditions, the complexity of such causality may produce chaotic behaviour (e.g. Earth’s atmosphere), with an associated subjective unpredictability which is only a function of the observer’s necessary ignorance of the endless causes driving the behaviour.

6) While “quantum entanglement” and “flower entanglement” are indeed indeterministic nonsense, the concept of non-isolation of material substance is an absolute key aspect of Neo-Determinism. When available, I suggest focusing on evidence that we can observe with our most direct instruments, i.e. our eyes. We have for example superfluids and plasma. In both cases the fluid at one side of a container (whether superfluid or plasma) “knows about” the properties of the material substance at the other side, a macroscopic distance away. This is actually the essence of why a superfluid is a superfluid and why a plasma is a plasma. With Neo-Deterministic assumptions, one must conclude that matter is one boundless all-pervading interconnected plenum. Superfluids and plasma demonstrate this concept in a dramatic way.

7) Your paragraph on Titius-Bode is very good, and greatly supports what I have just written. It is the neglected material interconnections between complex systems that make the collective correlations seem “spooky” when they are just purely causal (again, I recommend that you watch the YouTube videos linked above). Both superfluids and plasma are absolutely interconnected on a deeper subtler aetheric scale, and that’s the reason for the dramatic collective behaviours that they demonstrate and that we observe with our eyes.
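The statement in comments 2) and 4) that chaos is purely deterministic can be illustrated with a standard textbook system, the logistic map. This is an editorial choice of example, not something taken from the thread, and the function name and starting values are hypothetical. The sketch shows that the same starting value always reproduces exactly the same sequence, while a starting value that differs by one part in ten billion soon produces a visibly different one.

```python
def logistic_trajectory(x0, steps, r=4.0):
    """Iterate x -> r * x * (1 - x), a deterministic rule with chaotic behaviour at r = 4."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


# Same rule, same start: the two runs are identical step by step (strict causality).
run_a = logistic_trajectory(0.2, 100)
run_b = logistic_trajectory(0.2, 100)

# Same rule, start shifted by 1e-10: the observer's tiny ignorance grows with each step.
run_c = logistic_trajectory(0.2 + 1e-10, 100)

identical = run_a == run_b
max_gap = max(abs(p - q) for p, q in zip(run_a, run_c))
print("identical runs:", identical)
print("largest gap between nearby starts:", max_gap)
```

The unpredictability here comes only from imperfect knowledge of the starting value; nothing in the rule itself is random.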

Cheers!

Juan

On 20 January 2018 at 01:08, Jim Weninger <jwen1@yahoo.com> wrote:

Juan,

The non-Newtonian ideas of emergence and order out of chaos are the larger cause of problems for the mainstream even now. It is these ideas that cause them to be stumped by how one random event “over here” is related to another random event “over there”. For example, this crap:

In the long past was the idea of “as above, so below”. Newton caught the tail end of this thinking. The idea was simply this: the larger scale cycles (often in the heavens) drove the smaller scale cycles (right here on Earth). All the flowers opening in the morning were caused by the sun coming up, NOT that all the flowers opening collectively caused the sun to rise. All the snow falling in the North was due to the seasonal cycle, NOT that the falling snow CAUSED the seasons. Newton himself knew (even if he was totally wrong on the mechanism) that all the water rushing up on the beach was influenced by the moon’s position, NOT that the tides themselves influence the Moon’s orbit.

Yet this move away from “as above, so below” is what we have in our current very materialistic world view. Ideas of “higher powers” influencing us smack of religious idealism. So we end up with a world where every pattern is from random and chaotic motion at the bottom.

Imagine if Newton and his predecessors had fallen into that trap way back then. We may have theories today that say that all the random and chaotic opening of flowers DID cause the sun to rise. Then we also would have been stuck with the ideas of “flower entanglement”. That is, how on Earth did one flower over here “know” that another flower over there was opening? But this IS the mainstream view on the quantum level.

And to make a future prediction based on this logic: We can start with the ideas coming from EU, that stars form along large scale filaments, and then orbits (Titius-Bode’s law) are determined from those filaments. Then we expect to find correlations between orbits in two solar systems (small scale) strung along one (larger scale) filament. On the other hand, if the mainstream idea is right (Titius-Bode is “coincidence”, and planetary orbital radii are in fact random), then we shouldn’t expect another nearby solar system to share the same pattern. And you can foresee the mainstream issue of how one collapsing gas cloud “over here” knows what’s happening in another gas cloud “over there”.

Again, “as above, so below” causes us to expect correlations on the lower scale. Starting with the idea of random and chaotic behavior at the bottom will always lead to apparent “coincidences”, or, when coincidences become too unlikely to believe, will lead to nonsense about “entanglement”.

Isn’t that right?

Jim

On Friday, January 19, 2018, 4:37:51 PM MST, Juan Calsiano <juancalsiano@gmail.com> wrote:

Jim,

The different possible answers to your last question are a strict function of the fundamental worldview of the physical world that you are assuming. The text written in the first email has an underlying worldview that has indeterministic aspects, like for example the Greek-Inherited philosophical assumptions about the nature of matter and motion that Newton took from the atomists, and that the author of such text seems to be accepting.

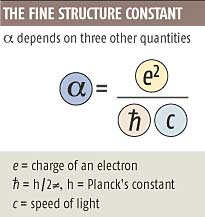

So what I will be proposing in my book is that we must first discuss and find an agreement on the most fundamental worldview of the physical world before having a conversation about very specific things (model-level) like nuclear structure, Planck’s constant, the fine structure constant, the concept of “forces”, etc. I further argue that the criteria to choose the most useful fundamental conceptual worldview should be (1) agreement with the totality of evidence and (2) internal non-contradiction between assumptions. Under such strict criteria, I hope that I don’t need to argue here that the cartoonish assumed worldview underlying classical mechanics must be abandoned. We now know that the world is vastly more complex than that. Actually, according to the Neo-Deterministic worldview, it is inexhaustibly so.

Coming back to your question: through the years I have collected several possible specific model-level answers, consistent with the general Neo-Deterministic assumptions, that try to explain the reason for that seemingly “magical” number of 137.03599. That said, until we coordinate worldviews, it may be futile to discuss these alternatives, as you may still be thinking in terms of the classical worldview. And I really don’t want to get sidetracked; I am already behind schedule.

Anyway, I’ll share with you here some suspicions. I think that the fine structure constant is intimately related to the dynamical moment-to-moment stabilization of organized material waving units such as atoms.

Have you studied the extremely important Non-Newtonian notions of emergence and order out of chaos? Take out your scientific calculator and have a taste of self-organization:

Put your calculator in degrees and choose any starting number (be creative!).

Repeat 6 times the following iteration of operations:

1) cos(x)
2) 1/x
3) e^x
4) Log10(x)
5) x/(0.06)

No matter what your starting number is (chaos), the final number always converges to: 7.2973475 (order). Using the lexicon of dynamical systems and complexity theory, such a result is an “attractor”.

Now you just need to take this attractor, take the reciprocal and multiply it by 1000. The final result is: 137.0360
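The calculator recipe above can be sketched in a few lines of Python for anyone without a scientific calculator at hand. The function names and starting values are hypothetical choices for illustration. Note that one round of the five steps is algebraically the same as log10(e) / (0.06 · cos(x°)), so the limit depends on the constant 0.06, and that a starting value within a small fraction of a degree of 90° (plus any multiple of 180°) will overflow or fail instead of converging.

```python
import math


def calculator_round(x):
    """One round of the recipe: cos (degrees), reciprocal, e^x, log10, divide by 0.06."""
    x = math.cos(math.radians(x))  # calculator set to degrees
    x = 1.0 / x
    x = math.exp(x)
    x = math.log10(x)
    return x / 0.06


def run_recipe(start, rounds=6):
    """Apply the full round `rounds` times, as described in the email."""
    x = start
    for _ in range(rounds):
        x = calculator_round(x)
    return x


# Hypothetical starting numbers; any value not too close to 90 degrees works.
for start in (1.0, 42.0, 1000.0, -5.0):
    attractor = run_recipe(start)
    print(start, "->", round(attractor, 6), "->", round(1000.0 / attractor, 3))
```

Each starting value lands on the same number to within rounding, which is the attractor behaviour described above.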

Speed of light may have changed over time

On 19 January 2018 at 00:31, Jim Weninger <jwen1@yahoo.com> wrote:

Yet, where does that specific number come into play?

Sent from my iPhone

Thanks!

The physically existent plenum does not allow for “photons”, though. What the experiments show is that electromagnetic radiation is physically emitted in discrete amounts of matter in motion quantified as hf (such discreteness emerges from the harmonics of the resonant physical structure of the emitting units), thereafter light spreads classically as continuous waves (as any capable RF Engineer intuitively knows), and such propagating continuous wave systems can be ultimately absorbed also continuously by detecting units until a threshold discrete level is reached and a resonant response / detection in the receptive unit is enacted (such threshold level is also directly related to the complex standing-wave harmonics of the resonant physical structure of the detecting units). Electromagnetic radiation exists only as continuous waves in a continuous medium, exactly as the founders of Electromagnetism explained.

In fewer words, once you adopt a conceptual framework based on strict Deterministic Coherence, the idea of a “photon” is precluded. Moreover, Eric Reiter’s experiments seem to directly falsify the idea. See: http://unquantum.net/ and http://www.thresholdmodel.com/

On 18 January 2018 at 10:12, Jim Weninger <jwen1@yahoo.com> wrote:

Don, did you share this with Juan already? I’m forwarding it just in case you didn’t.

Also: Stephen Boelcskevy <ouchbox@gmail.com>, student of reality, pro-videographer, and EU 2017 attendee

Hello U’uns (hayseed for ‘you guys’),

An excerpt from DJ’s new site, becomingborealis.com:

From Divine Cosmos by David Wilcock…

“There is a most profound and beautiful question associated with the observed coupling constant e – the amplitude for a real electron to emit or absorb a real photon. It is a simple number that has been experimentally determined to be close to 0.08542455. My physicist friends won’t recognize this number, because they like to remember it as the inverse of its square: about 137.03597 with an uncertainty of about two in the last decimal place. It has been a mystery ever since it was discovered more than fifty years ago, and all good theoretical physicists put this number up on their wall and worry about it.

Immediately you would like to know where this number for a coupling comes from: is it related to pi or perhaps to the base of natural logarithms? Nobody knows, it is one of the greatest damn mysteries of physics: a magic number that comes to us with no understanding by man. You might say that the “hand of God” wrote that number, and “we don’t know how He pushed His pencil.” We know what kind of a dance to do experimentally to measure this number very accurately, but we don’t know what kind of a dance to do on a computer to make this number come out – without putting it in secretly. [emphasis added]”

Reference on Znidarsic: A Tim Ventura interview (classic Znidarsic material): https://www.youtube.com/watch?v=6JiiQ22YC7Y

Per Mr. Z’s explanation, Newton was wrong, on at least one trivial assumption, that a corpuscle of light is absorbed into the structure of an atom instantaneously. Frank explains that Newton’s mathematical treatment of photon absorption was based on the understanding of the atomic model du jour, circa way back when. Newton, et al contemporaries, were not aware that the atom had internal structure let alone a nucleus.

Newton’s groundwork mathematics on photon absorption is yet a part of the foundation of modern quantum physics, AND the modern model of atomic quantum transitions is yet based on instantaneous atomic transitions.

Therein is the source of Planck’s constant, which universally occurs in modern physics, everywhere, because the modern atomic mathematical model is yet wanting of a proper model, where instantaneity is impossible, philosophically and experimentally. Planck’s constant appears in nature universally so long as any scientist universally applies a broken atomic model. Modern is as modern does. Mainstream scientists, traditionally and to this day, remain enslaved to a citational hierarchy they call infallible. And as such, the citational hierarchy of modernistic science is beyond falsification, therein by definition of science becomes pseudo-science.

Differently, Planck’s constant, or the ‘fine-grain constant,’ is a modern kludge to balance a ‘classical’ equation based on an antique principle that atoms transition instantaneously between energy levels (photon absorption/emission).

Mr. Z. is ignored by the mainstream, as he dares to claim he has found the cause of Planck’s mystery kludge, to remain citationally aligned with I. Newton. I could go on.

Comments?

-don
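The arithmetic in the quoted excerpt, that 0.08542455 is remembered as "the inverse of its square", can be checked directly. This is only a sketch of that one calculation; the variable names are hypothetical.

```python
# Value of the coupling constant as quoted in the excerpt.
coupling = 0.08542455

# "The inverse of its square": 1 / coupling^2.
inverse_square = 1.0 / coupling ** 2

print(f"1 / {coupling}^2 = {inverse_square:.5f}")
```

The result agrees with the "about 137.03597" given in the excerpt to within the stated uncertainty in the last decimal place.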


I would like to throw in that I concur with the notion that some “laws” and principles were defined at a time when some things we now consider standard knowledge were not even pondered yet. Meaning, I usually point out that if we do not understand the atom, its fundamental properties and construction, and the interaction mechanisms we observe, such as absorption/emission of photons, we will never be able to “get it right”.

On Thu, Jan 18, 2018 at 3:21 AM, don mitchell <don86326@gmail.com> wrote:


https://youtu.be/Fsnakpt1vBY